A Reader's Terminology Guide to Artificial Intelligence

Intelligence: "It seems to us that in intelligence there is a fundamental faculty, the alteration or the lack of which, is of the utmost importance for practical life. This faculty is judgment, otherwise called good sense, practical sense, initiative, the faculty of adapting one's self to circumstances." - Alfred Binet 1905 (translated 1916) [1]

Learning: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E." - Tom Mitchell 1997 [2]

"Intelligence is the ability to adapt to change." - Fake but reasonable quote falsely attributed to Stephen Hawking.

As an attentive reader of the news you find yourself bombarded with messages about AI and the growing scourge of automation. Coverage of interesting research often begins with statements of fear, uncertainty, or confusion. Articles even explicitly warn readers of AI as an existential threat. It's easy to fear what we don't understand, but a seed of understanding goes a long way to enabling critical but reasonable responses to the changing world. Replacing future fears with present knowledge allows us to face problems we can actually address, rather than suffer from ambiguous dread about unknowns.

This article aims to alleviate your AI fears. By the end we will have developed a basic vocabulary that allows reasonable discussion and consideration of the world of AI and its effects on us all.

AI: Artificial Intelligence. A field focused on tasks that involve machines that learn from experience.

AGI: Artificial General Intelligence. Intelligence that is at least as flexible and able to generalize as that of a human. This is also known as strong AI or full AI.

AI Superintelligence: Artificial general intelligence that exceeds human ability in all areas. Hypothetical.

Machine Learning (ML): The field involved in the development of machine-based systems that improve performance on a given task with experience, i.e. machines that learn from experience. This field also includes more mundane-sounding statistical methods such as linear regression and polynomial fits.

Big data/data science: Field concerned with using large datasets to make decisions, often using machine learning tools to do so.

AI winter: A period of increased skepticism, decreased interest, and decreased investment in AI research. The opposite of AI winter is sometimes referred to as an AI spring.

Modern "AIs" are Networks of Machine Learning Functions Working Together

The term AI conjures up a picture of an intelligent entity like those described in science fiction. In the modern usage you are likely to see in the press, an "AI" refers instead to a collection of machine learning functions grouped together in a single structure called a "model," "network," or "graph," depending on who is describing the work. This differs substantially from the sci-fi usage, where "an AI" is more likely to describe a person-like entity. An obvious example is IBM's Watson, which is treated by the press as if it were a bank of servers in a basement somewhere, interacting with the world via a red glowing eye and soothing monotone voice. However, Watson is actually a department staffed by thousands of (human) statisticians, engineers, and others who work on data-driven problems.

Likewise, the press also tends to treat "algorithms" as vaguely sentient actors, but an algorithm is just a set of rules for dealing with data. For example, a machine learning model will follow an algorithm to improve its performance on a task for a given dataset. Biased or seemingly malevolent behavior of an ML model is usually the result of bad data or a lack of foresight by the engineers that defined the training algorithm and model structure.

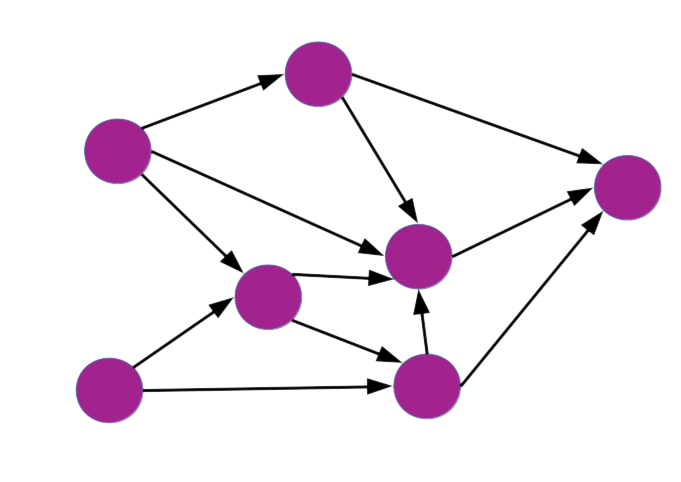

Graph: A graph is a structure of nodes connected by edges that define interactions between them. This abstraction is a useful way to describe machine learning models, and is the terminology used by machine learning packages such as Google's TensorFlow.

Network: Especially in neural networks, a machine learning model may be simply referred to as a network. The meaning is essentially interchangeable with graph, but the preferred terminology when presenting a new machine learning system is model.

Model: A configuration of machine learning tools connected together to solve some task.

Algorithm: A set of rules for dealing with data, similar to a protocol or recipe, and generally used to describe the rules that govern AI/ML functions or agents.

Agent: Any entity that displays agency, that is, the ability to make decisions based on data from the environment. Technically this includes humans and other non-machine actors, but in machine learning it is most often used to describe a model operating in a reinforcement learning environment.

A graph is a network of nodes connected by edges, and a useful way to describe machine learning models.

When is an AI Just a Model?

The distinction between AI and ML has more to do with the time period than with specific research directions or technological capabilities. Early forays in the 1950s to 1970s led to the expectation that machines would surpass human capabilities in all fields within a decade or two. A failure to measure up to the hype caused interest and funding to dry up, leading to the first AI winter. Later there was a more muted connectionist revival in the 1980s and 1990s that laid the neural network foundations for the current deep learning renaissance. During this time period, the term machine learning was adopted in part as a means for researchers to distance themselves from the over-hyped claims of their predecessors. With deep learning now making strides in myriad applications, the press, corporate, and research worlds have collectively embraced grandiose expectations and the term "artificial intelligence" once again.

Artificial neuron: Beginning with the McCulloch-Pitts neuron, aka the Threshold Logic Unit, an artificial neuron is a mathematical representation loosely based on the behavior of a biological neuron. In its simplest form, it is a (weighted) sum of some inputs that is compared against a threshold to decide whether the neuron fires.
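As a minimal sketch of the idea, the threshold unit can be written in a few lines of Python. The function name, the weights, and the thresholds below are illustrative assumptions, not taken from any particular library.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# All four combinations of two binary inputs.
input_pairs = [(a, b) for a in (0, 1) for b in (0, 1)]

# With equal weights and a threshold of 2, the unit behaves like a logical AND.
and_outputs = [threshold_neuron(pair, [1, 1], 2) for pair in input_pairs]

# Lowering the threshold to 1 turns the same unit into a logical OR.
or_outputs = [threshold_neuron(pair, [1, 1], 1) for pair in input_pairs]

print(and_outputs, or_outputs)
```

Changing only the threshold changes what the unit computes, which is the sense in which weights and thresholds are the adjustable part of the neuron.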

Artificial neural network: A group of artificial neurons connected by weights to data inputs and/or each other, with weights determining the strength of influence between different nodes. One of the first implementations of an artificial neural network was Frank Rosenblatt's Perceptron.

Connectionism: An older term for the branch of AI/machine learning concerned primarily with neural networks.

Deep learning: Machine learning that relies on neural networks with many stacked layers, such as Multi-Layer Perceptrons (MLPs). The threshold for how many layers are required to constitute a "deep" model is a matter of opinion, but a good rule of thumb is at least seven.

Modern Technology Accelerates Classic Learning Techniques

Many of the techniques (e.g. back-propagation) used by modern deep learning models were developed during the 1980s/1990s, with the difference between then and now largely coming down to increased computational power and large datasets. In the early 2010s, it became readily apparent that Graphics Processing Units (GPUs), developed for the video game and rendering markets, could run the mathematical operations describing neural network models rapidly and in parallel. The GPU advantage first became obvious in computer vision, fanning out into a wide variety of vision applications following the GPU-trained AlexNet's 2012 success in the ImageNet classification contest. Image classification remains an excellent starting point for learning about deep learning as an aspiring practitioner or informed lay-person.

GPU: A Graphics Processing Unit is a specialized piece of hardware for rapidly performing graphics operations in parallel. GPUs also happen to be very good at performing mathematical operations such as matrix multiplication or convolution in parallel.

Matrix Multiplication: Mathematical operation that outputs sums of column/row products of two matrices. In machine learning, a matrix multiplication conveniently describes the connections between two layers of artificial neurons.
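To make the link between matrices and layers concrete, here is a small plain-Python sketch. The `matmul` helper and the weight values are hypothetical and chosen only for illustration. Real systems use optimized libraries and GPUs for this step.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


# One input example with three features (a 1 x 3 matrix).
inputs = [[1.0, 2.0, 3.0]]

# Hypothetical weights connecting three input neurons to two output neurons (3 x 2).
# Row i, column j holds the strength of the connection from input i to output j.
weights = [
    [0.5, -1.0],
    [0.0, 2.0],
    [1.0, 0.5],
]

layer_output = matmul(inputs, weights)
print(layer_output)  # [[3.5, 4.5]]
```

Each output value is the weighted sum of all three inputs, so a single matrix multiplication computes every neuron in the layer at once.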

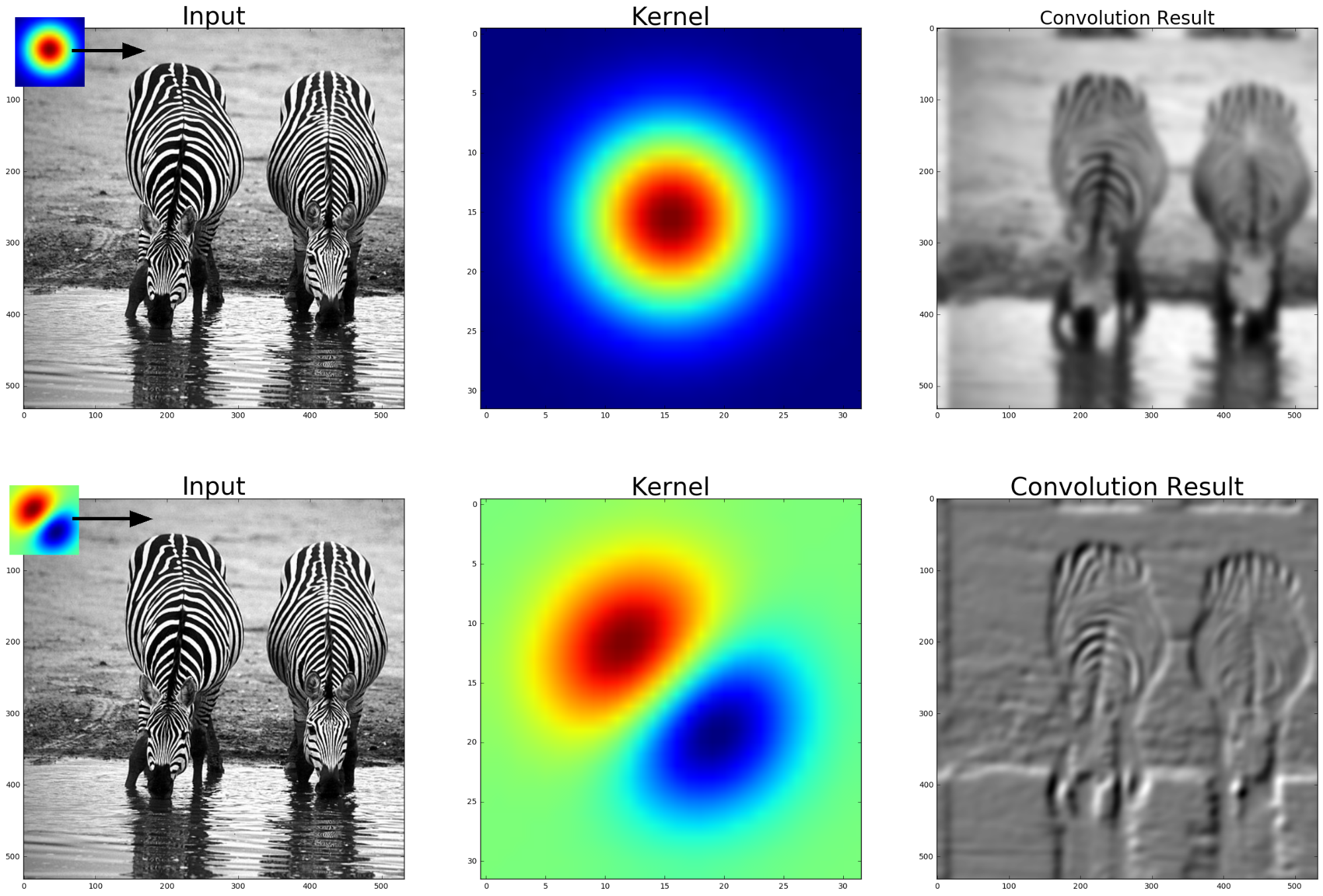

Convolution: An operation that slides a small kernel of weights across an input image or vector, multiplying the kernel with the part of the input under the window at each position and accumulating the products into one output value per position. In machine learning, convolution is used for weight-sharing, which vastly decreases the number of parameters needed to describe a model.

Convolution kernel: A small group of shared weights applied to every point of a layer or input data.
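The sliding-window idea is easiest to see in one dimension. The sketch below is an illustration under simple assumptions (no padding, a stride of one, and a made-up two-weight kernel). Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve1d(signal, kernel):
    """Slide the kernel across the signal, summing elementwise products at each position.

    No padding is used, so the output is shorter than the input.
    """
    width = len(kernel)
    return [
        sum(signal[start + offset] * kernel[offset] for offset in range(width))
        for start in range(len(signal) - width + 1)
    ]


signal = [0, 0, 1, 1, 1, 0, 0]
edge_kernel = [-1, 1]  # hypothetical kernel that responds to changes in the signal

response = convolve1d(signal, edge_kernel)
print(response)  # [0, 1, 0, 0, -1, 0]
```

The same two weights are reused at all six positions. A fully connected layer mapping seven inputs to six outputs would need 42 separate weights, which is the parameter saving that weight-sharing provides.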

Gradient descent: The process of following the slope of an error function to find the minimum (or at least local minimum) error for a given task.
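A minimal sketch of gradient descent, assuming a made-up one-parameter error function whose minimum sits at 3. The function names, learning rate, and step count are illustrative choices.

```python
def error(x):
    """A toy error function with its minimum at x = 3."""
    return (x - 3.0) ** 2


def slope(x):
    """Derivative of the toy error function with respect to x."""
    return 2.0 * (x - 3.0)


def gradient_descent(start, learning_rate=0.1, steps=100):
    """Repeatedly step downhill, against the direction of the slope."""
    x = start
    for _ in range(steps):
        x -= learning_rate * slope(x)
    return x


best_x = gradient_descent(start=-5.0)
print(best_x, error(best_x))
```

Each step moves the parameter a little way downhill, so the error shrinks until the slope is close to zero. In a real model the same loop runs over many weights at once rather than a single number.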

Back-propagation: Algorithm for assigning credit for error through multiple layers of a neural network using the chain rule of calculus.
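The chain rule can be traced by hand in a tiny network with one hidden unit and one output unit. This is a sketch under assumed choices (a tanh hidden unit, squared error, arbitrary starting weights), not a general implementation. The analytic gradients are compared with finite-difference estimates as a sanity check.

```python
import math


def forward(x, w1, w2):
    """Two-layer network: a tanh hidden unit followed by a linear output unit."""
    hidden = math.tanh(w1 * x)
    output = w2 * hidden
    return hidden, output


def loss(x, target, w1, w2):
    """Squared error between the network output and the target."""
    _, output = forward(x, w1, w2)
    return (output - target) ** 2


def backward(x, target, w1, w2):
    """Apply the chain rule from the loss back to each weight."""
    hidden, output = forward(x, w1, w2)
    d_output = 2.0 * (output - target)            # d loss / d output
    grad_w2 = d_output * hidden                   # because output = w2 * hidden
    d_hidden = d_output * w2                      # error passed back to the hidden layer
    grad_w1 = d_hidden * (1.0 - hidden ** 2) * x  # tanh'(z) = 1 - tanh(z)^2
    return grad_w1, grad_w2


x, target, w1, w2 = 0.5, 1.0, 0.8, -0.3
grad_w1, grad_w2 = backward(x, target, w1, w2)

# Finite-difference estimates of the same gradients, used only as a check.
eps = 1e-6
numeric_w1 = (loss(x, target, w1 + eps, w2) - loss(x, target, w1 - eps, w2)) / (2 * eps)
numeric_w2 = (loss(x, target, w1, w2 + eps) - loss(x, target, w1, w2 - eps)) / (2 * eps)
print(grad_w1, numeric_w1)
print(grad_w2, numeric_w2)
```

The gradient for the first weight reuses the error signal already computed for the output layer, which is how credit for the error is assigned layer by layer.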

Convolution is a mathematical operation used in many state-of-the-art deep learning models involving sliding multiplication of an input (in this example an image) with a weights kernel. Convolutions and other operations used in deep learning can be readily parallelized for fast computation on GPUs.

Supervised Learning: A task that depends on curated, labelled data, such as images classified by their content. An ML model learns to infer the labels from the input data, e.g. identifying objects in image classification.

Unsupervised Learning: Models learn a task based on unlabelled data. For example, they may try to learn efficient encodings of input data (as in autoencoders) or learn to generate facsimile data (as in Generative Adversarial Networks).

Natural Language Processing: Any task involved in processing language data such as text or speech. This includes machine translation between languages and text generation.

Reinforcement Learning: Tasks defined by an agent interacting with a defined environment to earn rewards. This AI sub-field is responsible for many of the game-playing advances in recent years.

Bias: A model is said to suffer from bias error if its outputs depend more on the model itself than on the input data (like a self-driving car that always turns left).

Variance: A model may fail due to variance problems if it depends too much on the specific dataset used for training, making it generalize poorly to test datasets and real-world applications. This is often called overfitting.
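The two failure modes can be illustrated with two deliberately extreme models on made-up data. Everything below is a hypothetical sketch: the data follow an assumed relationship y = 2x plus noise, one model ignores its input (bias), and the other memorizes the training set (variance).

```python
import random


def make_data(n, rng):
    """Noisy samples of the assumed relationship y = 2x."""
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + rng.gauss(0.0, 0.3)) for x in xs]


def mean_squared_error(predict, data):
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable
train = make_data(30, rng)
held_out = make_data(30, rng)

train_mean = sum(y for _, y in train) / len(train)


def constant_model(x):
    """High bias: ignore the input and always predict the training mean."""
    return train_mean


def memorizing_model(x):
    """High variance: return the label of the nearest training point."""
    _, nearest_y = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest_y


for name, model in [("constant", constant_model), ("memorizing", memorizing_model)]:
    print(name, mean_squared_error(model, train), mean_squared_error(model, held_out))
```

The memorizing model scores a perfect zero error on its own training data because it has also memorized the noise, and its error rises on the held-out data. The constant model gives the same answer whatever the input, much like the car that always turns left.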

Current Trajectory and Future of Deep Learning/AI/ML

With increased interest and investment, we can only expect the field of deep learning to accelerate as more minds work on problems in the field. New generations of GPUs are increasingly likely to cater to the deep learning crowd, and new types of hardware are being built specifically for AI. Together we've developed a basic understanding and corresponding vocabulary of AI and machine learning. This should allow us to read AI/ML related news with a critical eye and the ability to discuss realistic implications of current research. The press may prefer their readers to dread an ambiguous AI apocalypse, but with a little knowledge and curiosity we can engage in meaningful debate about clear and present effects of AI/ML on our everyday lives. It's time to stop worrying and learn to love AI for what it can accomplish while putting in place common-sense safeguards against negative and unintended consequences.

[1] Alfred Binet. New Methods for the Diagnosis of the Intellectual Level of Subnormals. L'Année Psychologique, 12, 191-244. (1905). Translation by Elizabeth S. Kite first appeared in 1916 in The development of intelligence in children. Vineland, NJ: Publications of the Training School at Vineland. https://psychclassics.yorku.ca/Binet/binet1.htm

[2] Tom Mitchell. Machine Learning. McGraw Hill, 1997. https://www.cs.cmu.edu/~tom/mlbook.html

Original image of zebras in the public domain by John Storr.